You didn't understand (or I explained it poorly).

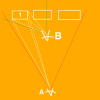

Consider this diagram:

View attachment 167521

You are photographing 3 buildings. At location A, they all fit in a single frame. The red lines are your FOV. Look at the 3 white lines from A, showing how the front and side of building 1 project toward that point. Note that from this location you will capture some background between the buildings, and most of building 1's image will be its front, with only a narrow, skewed view of its side.

Now, in an attempt to take the same image at higher resolution, you move to B and shoot a three-image pano from that single location to stitch together. The green lines represent the same FOV as before, now turned to the left for the first image.

The three white lines from B represent the same front and side projection of building 1, but from this closer location. This portion of the future composite image is not only distorted by the wide-angle lens, which can be corrected, but its content is also different, which can't be corrected. In the distant capture, the back corner of building 1 and some background between the buildings are visible. In the close-up capture, the side of building 1 takes up a much wider portion of the image, almost as much as the front. Also, the back corner of building 1 and the background between the buildings are hidden by the middle building.
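To put rough numbers on the side-versus-front effect, here is a small Python sketch that computes, in plan view, the angle each face of building 1 subtends at the camera. The coordinates are made up for illustration and are not measured from the diagram: the front face is 10 units wide, the side is 10 units deep, A is 100 units back from the front and B is 25 units back.

```python
import math


def subtended_deg(camera, p, q):
    """Angle (degrees) that the segment p-q subtends at the camera, in 2D plan view."""
    ax, ay = p[0] - camera[0], p[1] - camera[1]
    bx, by = q[0] - camera[0], q[1] - camera[1]
    cross = ax * by - ay * bx
    dot = ax * bx + ay * by
    # atan2(|a x b|, a . b) is the unsigned angle between the two sight lines.
    return math.degrees(abs(math.atan2(cross, dot)))


# Hypothetical plan-view geometry (arbitrary units): building 1's front face runs
# from x=-30 to x=-20 along y=0, and its right-hand side goes 10 units back.
FRONT = ((-30.0, 0.0), (-20.0, 0.0))
SIDE = ((-20.0, 0.0), (-20.0, 10.0))

# Both cameras are centred on the row of buildings (x=0), A far back, B close in.
CAMERAS = {"A (far)": (0.0, -100.0), "B (near)": (0.0, -25.0)}

for name, cam in CAMERAS.items():
    front = subtended_deg(cam, *FRONT)
    side = subtended_deg(cam, *SIDE)
    print(f"{name}: front {front:.1f} deg, side {side:.1f} deg, side/front {side / front:.2f}")
```

With these assumed numbers the side goes from roughly a fifth of the front's angular width at A to roughly three quarters of it at B, which is the change in content that lens correction can't undo.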

A set of 3 images taken at the right 3 locations across the front of the buildings and then stitched together will reproduce the single-frame distant image more faithfully, and will reduce the look of the content being projected onto the inside of a cylinder.

For a real situation you can diagram this out, do the math, and figure out the correct locations for the 3 pictures. It's a lot of work, but it can be more than worth it for some applications.
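As a starting point for the math, here is a minimal Python sketch that plans shifted (not rotated) shots. It assumes the front of the buildings is a flat plane of known width, the same lens is used for every shot, and the camera points straight at that plane; `plan_shifted_shots` and `ShotPlan` are hypothetical names. It only lines the frames up on the front plane, so anything behind that plane will still show some parallax between shots.

```python
import math
from dataclasses import dataclass


@dataclass
class ShotPlan:
    distance: float         # camera-to-front-plane distance for every shot
    frame_width: float      # width of the front plane covered by one frame
    positions: list[float]  # lateral camera positions, 0 = centre of the scene


def plan_shifted_shots(scene_width, hfov_deg, n_shots=3, overlap=0.0):
    """Plan n side-by-side shots that tile a flat front plane of scene_width."""
    if n_shots < 1:
        raise ValueError("n_shots must be at least 1")
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    step_fraction = 1.0 - overlap
    # n frames of width w, each shifted by w * (1 - overlap), must span the scene.
    frame_width = scene_width / (1.0 + (n_shots - 1) * step_fraction)
    step = frame_width * step_fraction
    # A lens with horizontal FOV theta covers 2 * d * tan(theta / 2) at distance d.
    distance = frame_width / (2.0 * math.tan(math.radians(hfov_deg) / 2.0))
    left = -scene_width / 2.0 + frame_width / 2.0
    positions = [left + i * step for i in range(n_shots)]
    return ShotPlan(distance, frame_width, positions)


if __name__ == "__main__":
    # Hypothetical scene: a 90-unit-wide front and a 60-degree horizontal FOV.
    single_frame_distance = 90.0 / (2.0 * math.tan(math.radians(60.0) / 2.0))
    print(f"single frame from {single_frame_distance:.1f} units back")
    print(plan_shifted_shots(scene_width=90.0, hfov_deg=60.0))
    print(plan_shifted_shots(scene_width=90.0, hfov_deg=60.0, overlap=0.2))
```

With those example numbers the single frame is taken about 78 units back, while the three no-overlap shots are taken about 26 units back at -30, 0 and +30. In practice you'd add some overlap (e.g. `overlap=0.2`) so the stitching software has common content to match.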

Less work is to eyeball it. For this scene you know from the single-frame shot what it looks like between the buildings, so from the near distance slide (roll) side to side until it looks about like that and take the first shot. Then move to the middle and take the second, and repeat for the third, each time positioning yourself side to side so the frame looks similar to that third of the distant image.

Or, use the pano feature. Or any amount in between. Really depends on what you need.