30fps video is a bit smoother than 24fps and is becoming more of a standard now that film has moved into the digital world and is viewed more in living rooms than on big screens.

Virtually no theatrical content is shot at 30p, even though it could be. A number of years ago there were some experimental theatrical releases at 48p in selected theaters. They were not well accepted by audiences, who have become conditioned to narrative content with the 24p characteristics. 30p and 60p are associated with live TV.

Real 24p causes problems in video transfer. Video has several native frame rates that are not even multiples of 24, but everything must end up at 25, 30 or 60fps. 24fps doesn't convert to 30fps without a major cadence bump, and the result depends on which device does the conversion and how sophisticated it is. That makes for a bit of a wild situation, from very good to not so much. High-end transfers are, of course, done right.
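To see where the cadence bump comes from, here is a minimal sketch of the simplest possible conversion, plain frame repetition. It is an illustration only, not what any particular device does; real converters may use pulldown on fields, blending or interpolation instead. The function names are made up for this example.

```python
def source_frame_for_output(out_index: int, src_fps: int = 24, out_fps: int = 30) -> int:
    """Index of the source frame shown at a given output frame (frame repetition only)."""
    return (out_index * src_fps) // out_fps


def cadence(n_out: int, src_fps: int = 24, out_fps: int = 30) -> list[int]:
    """Source frame index for each of the first n_out output frames."""
    return [source_frame_for_output(i, src_fps, out_fps) for i in range(n_out)]


if __name__ == "__main__":
    # First ten output frames of a 24 -> 30 conversion.
    print(cadence(10))  # [0, 0, 1, 2, 3, 4, 4, 5, 6, 7]
```

One source frame in every four gets shown twice, so motion advances unevenly. That uneven step is the bump.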

Then there's actual 24p video. When you output 24p video directly to a display, there's still very possibly a frame rate conversion in the display. There is a lot of video processing power in today's displays, even the low cost ones. You've probably noticed the horrible "motion smoothing" interpolation processing that's on by default in some TVs. It's just awful, and should always be turned off. But if you're shooting for anyone but yourself, you don't have that control. That's where 24p is just a disadvantage. If you provide at least 30p, the motion smoothing won't do so much damage.

Even if the display can do 24p natively, there's a new problem. In 24fps film, which is what 24p tries to emulate, projection actually shows each frame twice with equal exposure and blackout times. What you see has a 48Hz flicker on a 24fps image. Digital Cinema projectors emulate that shutter. Consumer video devices rarely duplicate that, so attempts to shoot video at 24p generally fall short, often very short, of the film emulation that is desired. But you can always shoot your own tests. That's what I do with every camera, even the ones I rent for jobs. Some just don't do 24p well at all; actually, very few do it well. Then there's the display, of course.

It's also good practice to learn how to apply the 180 degree rule. By setting the shutter speed to 1/60th of a second for 30fps, just the right amount of motion blur is applied. This avoids the soap opera effect, where everything is fully in focus at all times, and the judder that occurs otherwise. As Hands Down mentioned, an ND filter will be a must on the Mini 3 Pro to slow the shutter down and expose correctly, because you can't adjust the aperture like on the Mavic 2 Pro or Mavic 3.
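For anyone who wants the arithmetic behind the 180 degree rule, here is a rough sketch. The exposure time is the fraction of a full 360 degree rotation that the shutter is open, divided by the frame rate. The function name is just for illustration.

```python
from fractions import Fraction


def shutter_time(fps: int, shutter_angle: int = 180) -> Fraction:
    """Exposure time in seconds: (shutter_angle / 360) / fps."""
    return Fraction(shutter_angle, 360 * fps)


if __name__ == "__main__":
    for fps in (24, 30, 60):
        t = shutter_time(fps)
        print(f"{fps}p -> {t.numerator}/{t.denominator} s")
```

At 30fps that works out to 1/60, at 24fps to 1/48 and at 60fps to 1/120. Most cameras only offer nearby values such as 1/50 for 24p, which is close enough in practice.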

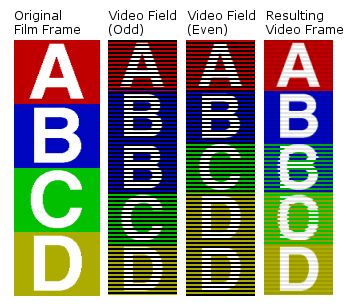

Well, close. The "soap opera" effect is not primarily a shutter speed issue, but a frame rate issue. Broadcast TV in the USA runs at 30 frames per second, and did even in the SD analog days, though it was delivered as 60 interlaced fields (60i). The result was and is ultra fluid motion. Remember, the "p" stands for "progressive" scanning, where a sensor is scanned from the top down, making the effective shutter interval approximately 1/60, though in many early camera systems there was a time difference from the top to the bottom. Many digital imaging systems now simulate a mechanical shutter, even if the sensor is read progressively, and that mechanical shutter simulation can usually be turned on and off in a menu, though I haven't seen that option on a drone camera so far.

What a longer shutter interval does is allow a certain amount of motion blur, which makes moving elements appear to move more fluidly. That's true at any frame rate, but more critical at the lower rates like 24 or 30. A short duration shutter makes motion look choppy, and moving elements very sharp. This was used extensively as an effect in Gladiator during fight scenes, where Ridley Scott wanted to add a sense of speed and confusion. It wasn't used in the entire film, just the fight scenes. It looked like a 1/500 shutter or faster at 24p. The high shutter speed effect became something of a visual motif for a few years, showing up in other films and TV commercials until everyone finally burned out on it. It doesn't work for those of us shooting drone video because we can have a lot of elements moving quickly, and motion blur helps make those elements appear smooth. 180 degree shutter to the rescue. And yes, you do need ND filters to slow the shutter down.
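As a rough guide to how much ND you need, here is a small sketch that compares the shutter speed the camera picks without a filter to the 180 degree target. It assumes ISO and aperture stay fixed. The metered speed in the example is hypothetical, and the function names are made up for illustration.

```python
import math


def nd_stops(metered_shutter: float, target_shutter: float) -> float:
    """Stops of light to cut so the longer target shutter gives the same exposure."""
    return math.log2(target_shutter / metered_shutter)


def nd_factor(stops: float) -> int:
    """Nearest power-of-two filter factor (4 for ND4, 8 for ND8, 16 for ND16, ...)."""
    return 2 ** max(0, round(stops))


if __name__ == "__main__":
    # Hypothetical bright day: camera meters 1/1000 s unfiltered, target is 1/60 s for 30p.
    stops = nd_stops(1 / 1000, 1 / 60)
    print(f"{stops:.1f} stops -> ND{nd_factor(stops)}")
```

In that example the answer lands at about four stops, so an ND16 is the closest common filter. If the light changes, so does the filter you need, which is why the filters are sold in sets.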

There are those who will argue that higher frame rates are what's needed for more realism, and I don't disagree up to a point. That point is probably 60p with 180 degree shutter timing. Higher rates have been tried, of course, but the advantage is tiny. The obvious trade-off is file size vs time. 60p files get huge, which makes editing on a modest computer clumsy and slow. Blackmagic Design makes a couple of speed test apps that let you test your system, and will report what formats and frame rates it can handle in the real world. Mac only, sorry.

As to putting multiple formats and frame rates in an editor, it depends on the software, but the major players will all create compatible proxy files when you do that. So apart from the difference in frame rate being visible, you can cut at will, and everything plays at the right speed. Be aware that it will take at least some time for the software to render the new proxy files, so if you're pressed for time in post, stay in the same frame rate and codec, and set your project parameters to match so nothing has to get rendered. Rendering may also occur after certain editing operations.

Not that it matters, but I shoot mostly 4K/30. If my post time is short and 4K isn't mandatory, I still shoot in 1080p/30 occasionally. My cameras are all set to simulate mechanical shutters at 180 degrees where possible. The drones are the exceptions. It's just a pain to mess with ND filters, but the results are worth it.