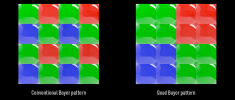

Quad Bayer sensors are very interesting. They are basically an oversized version of a Bayer sensor in which each R, G, or B color patch covers 4 photodiodes instead of one. Each photodiode has its own microlens and can behave as an individual pixel. They are also very well suited to video applications.

They have 3 'tricks':

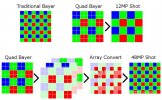

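1) Operate at 1/4 the resolution to improve the signal-to-noise ratio (binning). In simpler terms, the 4 photodiodes under each color patch are combined into one pixel, which cuts noise variance by roughly 4X (about a 2X better signal-to-noise ratio, assuming the noise is random). In the case of the M3P, that would be its 12MP mode.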

2) They can read out every second row of the sensor slightly earlier than the rest, which gives those rows a shorter exposure that preserves highlight information, and then combine them with the rest of the data to improve dynamic range (DR). This is how the M3P does its baked-in HDR shooting. Again, this only works at 1/4 the resolution, so up to 12MP for the M3P. That is not a problem for video because 4K is only around 8MP, which is what makes these sensors attractive for video applications.

3) All pixels are used individually, and the data is approximated (re-mosaiced) back into traditional Bayer data, but with less precision than a traditional Bayer sensor. So you end up with a 48MP image with a noticeable quality increase over the 12MP version, but a noticeable quality decrease compared to a traditional 48MP Bayer sensor.
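To put a rough number on the binning mode in point 1, here is a minimal simulation sketch. It assumes every photodiode in a patch sees the same signal plus independent Gaussian noise, which is a simplification of real sensor noise, and the function name and parameter values are made up for illustration. Under that assumption, averaging 4 photodiodes should roughly halve the noise standard deviation, which is the same thing as cutting noise variance to about a quarter.

```python
import random
import statistics


def simulate_binning(signal=100.0, noise_sigma=10.0, n_patches=20000, seed=0):
    """Compare the noise of single photodiodes with 2x2 binned patches.

    Hypothetical model: each photodiode reads `signal` plus independent
    Gaussian noise with standard deviation `noise_sigma`.
    """
    rng = random.Random(seed)
    single = []
    binned = []
    for _ in range(n_patches):
        # Four photodiodes sitting under one color patch.
        patch = [rng.gauss(signal, noise_sigma) for _ in range(4)]
        single.append(patch[0])        # use one photodiode on its own
        binned.append(sum(patch) / 4)  # average all four (binning)
    return statistics.stdev(single), statistics.stdev(binned)


single_sigma, binned_sigma = simulate_binning()
ratio = single_sigma / binned_sigma
print(f"single: {single_sigma:.2f}  binned: {binned_sigma:.2f}  ratio: {ratio:.2f}")
```

The printed ratio should land close to 2. Real sensors also have read noise and other effects that this toy model ignores, so treat it as a ballpark only.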

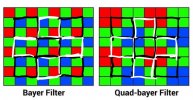

When you demosaic data from a regular Bayer sensor, neighboring samples of each color are closer together, so you get a much more precise result when the image is reconstructed. When you re-mosaic Quad Bayer sensor data, the process is less precise (samples of the same color are twice as far apart), which is why you do not get an image that actually has 4X the color resolution, even though the final result is still 48MP.
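The "twice as far apart" point can be seen by printing the two color filter layouts side by side. This is only a sketch: it assumes an RGGB ordering (the actual ordering on any given sensor may differ), and the function names are made up for illustration. It prints a small corner of each mosaic and measures how many pixels it takes for the color pattern to repeat along a row.

```python
def bayer_color(row, col):
    """Color of a pixel in a standard Bayer mosaic (RGGB order assumed)."""
    return [["R", "G"], ["G", "B"]][row % 2][col % 2]


def quad_bayer_color(row, col):
    """Color in a Quad Bayer mosaic: each Bayer cell grows into a 2x2 block."""
    return bayer_color(row // 2, col // 2)


def pattern_period(color_at, size=16):
    """Smallest horizontal shift after which the first row repeats itself."""
    row = [color_at(0, c) for c in range(size)]
    for shift in range(1, size):
        if all(row[c] == row[c + shift] for c in range(size - shift)):
            return shift
    return size


def show(color_at, size=4):
    """Render the top-left corner of a mosaic as text."""
    return "\n".join(
        " ".join(color_at(r, c) for c in range(size)) for r in range(size)
    )


print("Bayer:")
print(show(bayer_color))
print("Quad Bayer:")
print(show(quad_bayer_color))
print("Bayer period:", pattern_period(bayer_color))
print("Quad Bayer period:", pattern_period(quad_bayer_color))
```

The Bayer pattern repeats every 2 pixels and the Quad Bayer pattern every 4, so at the same pixel count the color samples are laid out on a grid that is twice as coarse.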

It's not entirely unlike upscaling resolution, which is an example more people might be familiar with (I'm not saying it's the same thing). When you play 1080p content on your 4K TV, you are still seeing ~8 million pixels, but that image was built from 2MP of data instead of 8MP. It looks good, but not as good as native 4K, because the input is still only 2MP. A Quad Bayer sensor is likewise working with much less color resolution, because each color patch is shared by 4 subpixels, even though it has 4X the individual pixels.
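For anyone who wants to check the pixel counts in the TV comparison, here is the arithmetic as a tiny sketch. It assumes the usual consumer resolutions of 1920x1080 for 1080p and 3840x2160 for 4K, and the helper name is just for illustration.

```python
def megapixels(width, height):
    """Pixel count in millions for a given frame size."""
    return width * height / 1_000_000


full_hd = megapixels(1920, 1080)  # 1080p frame
uhd_4k = megapixels(3840, 2160)   # consumer 4K (UHD) frame

print(f"1080p: {full_hd:.2f} MP")
print(f"4K:    {uhd_4k:.2f} MP")
print(f"4K has {uhd_4k / full_hd:.0f}x the pixels of 1080p")
```

That is the same 4X jump in pixel count as going from 12MP to 48MP, which is why the comparison is a handy one.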

Another way to look at it is with an extreme example. Let's say you had a 48MP sensor with only 4 color patches, each patch containing 12 million pixels. You still have 48 million individual pixels, but when you go to reconstruct the image, you would have color data with a precision level far too low to be useful. So the sensor in the M3P is technically 48MP, but the color information it has to work with to reconstruct the image is diluted, which reduces image quality compared to a traditional 48MP Bayer sensor.

If you want to look at some real-world examples, look at a 12MP and a 48MP M3P photo at 100%, and then go compare a 12MP and a ~45MP photo from a full frame camera (there are no 48MP FF cameras, but it's close enough). Both comparisons are apples to apples as long as you're comparing the same sensor sizes to one another. You will see a night and day difference between a 4X resolution increase via Quad Bayer and a 4X resolution increase with a traditional Bayer sensor.

None of this means the sensor in the M3P is bad. Quite the opposite, as Quad Bayer has some unique advantages for video applications. But strictly from a stills photography standpoint, a 48MP image from a traditional Bayer sensor is objectively better than one from a Quad Bayer sensor, because the image is reconstructed with a greater degree of precision. This is also why you do not see Quad Bayer sensors in the very best DSLRs and mirrorless cameras designed primarily for stills photography, although some of the very best video-centric cameras (such as the Sony A7SIII) use Quad Bayer sensors.